One Firewall. One Dashboard. Any Integration.

LLM Intercept is a firewall that inspects every AI request for secrets, PII, and prompt injection before it leaves your org.

Protect employees, apps, and APIs via Browser Extension, SDK, or Gateway.

Block secrets & PII before they ever reach LLMs.

Centralized logging & policies for audits.

Let teams use ChatGPT/Claude/Gemini without security tickets.

Four steps to complete protection

The Browser Extension, SDK, or Gateway routes each request to LLM Intercept first.

Secrets, PII, and prompt injection detected in <100ms using pattern matching + ML.

Block, redact, mask, or allow based on your policies.

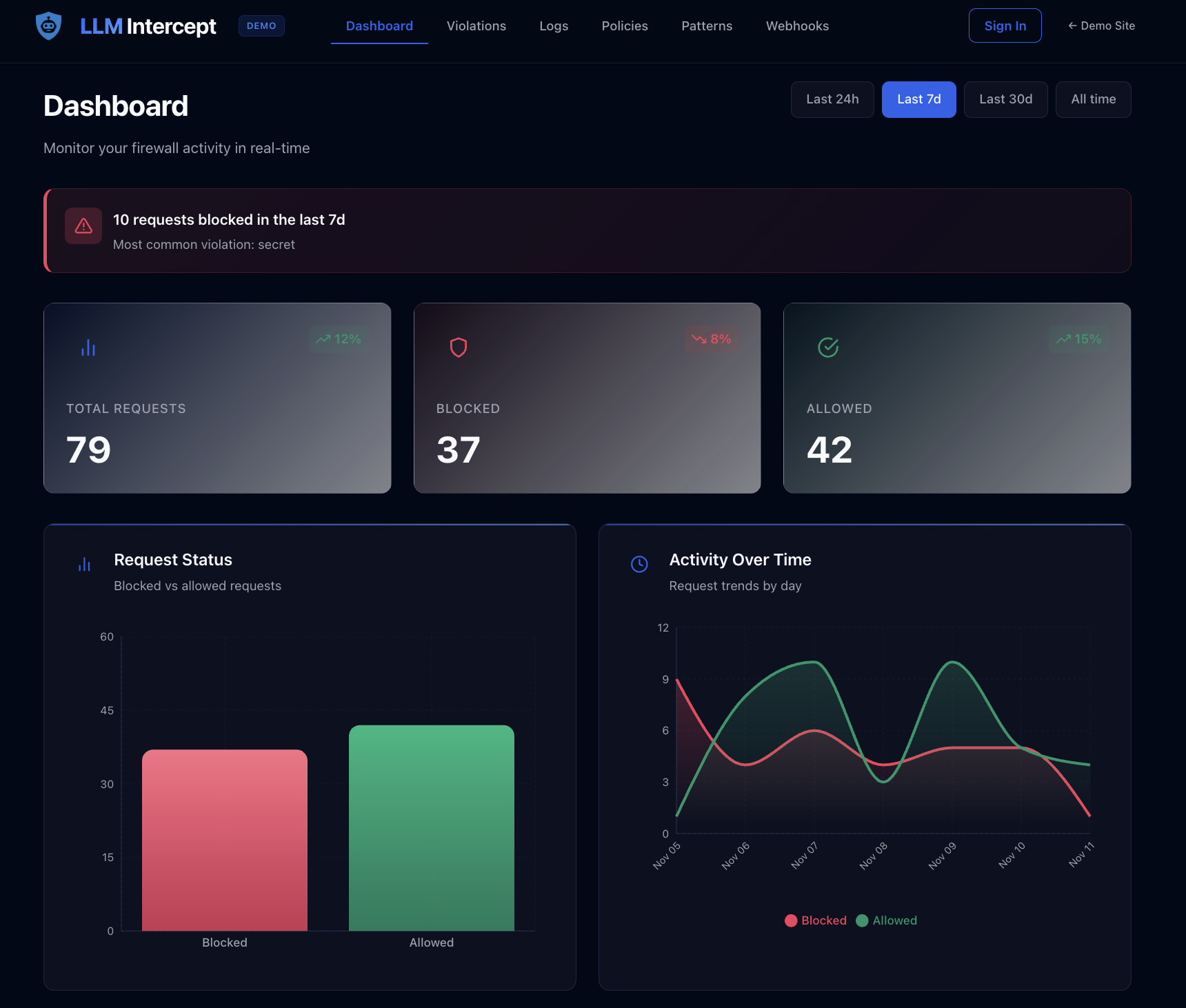

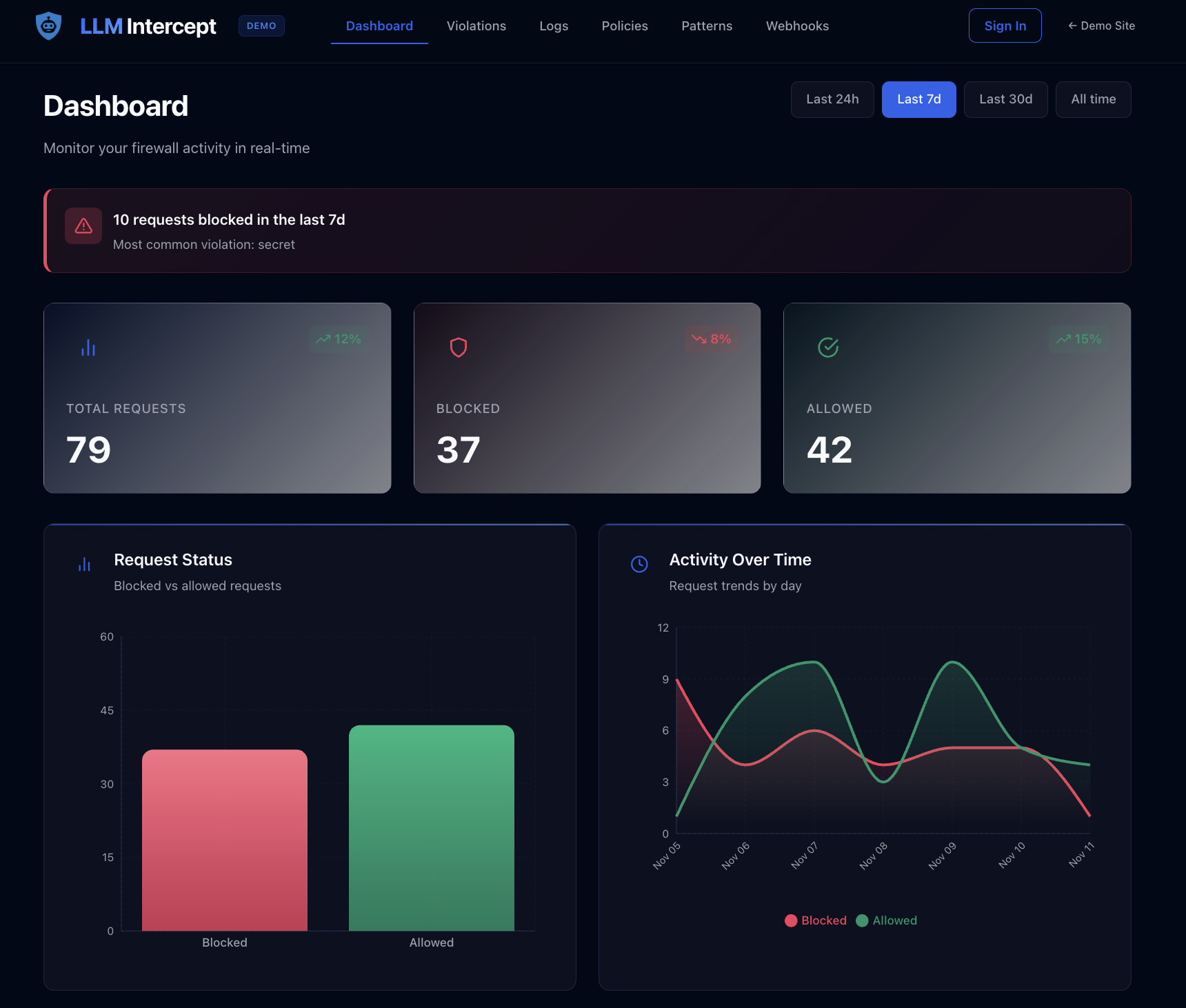

Security teams see all LLM usage: violations, users, apps, and models in one place.
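The detect and enforce steps above can be pictured with a minimal Python sketch. It assumes regex-only detection and a simple per-category policy table. Everything in it is hypothetical and for illustration only: the rules, `POLICY`, `ALLOWLIST`, `scan`, and `enforce` are not LLM Intercept's actual detectors or API, and the ML classifiers are not modeled.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    MASK = "mask"
    REDACT = "redact"
    BLOCK = "block"


# Strictness order used to pick a single verdict for the whole request.
SEVERITY = {Action.ALLOW: 0, Action.MASK: 1, Action.REDACT: 2, Action.BLOCK: 3}

# Hypothetical detector rules (category, regex). ML classifiers are not modeled.
PATTERNS = [
    ("aws_access_key", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("email", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("prompt_injection", re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)),
]

# Hypothetical policy table: what to do with each category of finding.
POLICY = {
    "aws_access_key": Action.BLOCK,
    "ssn": Action.REDACT,
    "email": Action.MASK,
    "prompt_injection": Action.BLOCK,
}

# Hypothetical allowlist: exact matches that are never flagged.
ALLOWLIST = {"support@example.com"}


@dataclass
class Finding:
    category: str
    start: int
    end: int
    text: str


def scan(prompt: str) -> list[Finding]:
    """Detect step: collect every pattern match that is not allowlisted."""
    findings = []
    for category, pattern in PATTERNS:
        for m in pattern.finditer(prompt):
            if m.group(0) not in ALLOWLIST:
                findings.append(Finding(category, m.start(), m.end(), m.group(0)))
    return findings


def enforce(prompt: str) -> tuple[Action, str]:
    """Policy step: return the strictest action and the text to forward."""
    decided = [(f, POLICY.get(f.category, Action.ALLOW)) for f in scan(prompt)]
    if not decided:
        return Action.ALLOW, prompt
    verdict = max((action for _, action in decided), key=SEVERITY.__getitem__)
    if verdict is Action.BLOCK:
        return Action.BLOCK, ""  # nothing is forwarded to the LLM
    out = prompt
    last_start = len(prompt)
    # Rewrite spans right to left so earlier offsets stay valid; skip overlaps.
    for f, action in sorted(decided, key=lambda pair: pair[0].start, reverse=True):
        if action is Action.ALLOW or f.end > last_start:
            continue
        if action is Action.REDACT:
            replacement = f"[{f.category.upper()}]"
        else:
            replacement = "*" * len(f.text)
        out = out[:f.start] + replacement + out[f.end:]
        last_start = f.start
    return verdict, out
```

Returning the strictest verdict means one blocked finding stops the whole request. Mask and redact, by contrast, rewrite only the matched spans. For example, `enforce("SSN 123-45-6789 on file")` forwards `"SSN [SSN] on file"`.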

See how the firewall protects against actual security threats in real time.


Vendor-agnostic security that protects regardless of which AI your team uses

Full support for OpenAI models, including GPT-3.5 and GPT-4.

Complete protection for Anthropic's Claude models.

Full support for Google's Gemini models, including Gemini Pro and Ultra.

Pattern matching + ML models + allowlist/denylist rules. Detects secrets, PII, and prompt injection in <100ms.

Tuned models minimize false positives, while allowlist patterns and custom rules keep normal prompts from being blocked.

Deploy the gateway in your VPC, or self-host it, for complete control over your data.

No training on your data. Data minimization (truncation and hashing) ensures only the necessary content is scanned.
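Data minimization by truncation and hashing can be illustrated with a short Python sketch. It assumes the prompt is cut to a fixed length before scanning and that audit logs keep only a keyed hash of the full prompt instead of the raw text. `MAX_SCAN_CHARS`, `AuditRecord`, and `minimize` are hypothetical names, and the 4096-character limit is an arbitrary placeholder rather than a documented product setting.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical limit: only the first N characters are passed to the scanner.
MAX_SCAN_CHARS = 4096


@dataclass(frozen=True)
class AuditRecord:
    prompt_digest: str   # keyed hash: correlates events without storing raw text
    original_length: int
    scanned_length: int


def minimize(prompt: str, key: bytes, max_chars: int = MAX_SCAN_CHARS) -> tuple[str, AuditRecord]:
    """Truncate the prompt for scanning and keep only a keyed hash for the audit log."""
    scanned = prompt[:max_chars]
    # HMAC-SHA256 over the full prompt; the raw text itself is never logged.
    digest = hmac.new(key, prompt.encode("utf-8"), hashlib.sha256).hexdigest()
    return scanned, AuditRecord(digest, len(prompt), len(scanned))
```

Because truncation happens before scanning, nothing past the cutoff is inspected. The limit therefore trades scan coverage against latency and data exposure. The sketch uses a keyed HMAC rather than a bare SHA-256 because short prompts could otherwise be recovered by hashing guessed candidates. That choice is an assumption of this sketch, not a described product behavior.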

Request an enterprise demo